Image reprinted from Campaign US article

This article was co-written by Ready State Content Director Derek Slater and first published on Campaign US.

When we started this series on artificial intelligence in marketing, we thought that marketers weren’t paying enough attention to the potential of machine learning in particular. (Refresher: Machine learning refers to the ability of a computer program to learn from experience, getting better at a task by looking at data instead of requiring new programming.)

Six months later, it looks like we already have the opposite problem. The AI hype train is roaring out of the station; it feels like every as-a-service offering is touting a machine-learning capability. Now marketers are paying attention but have to sort out reality from instances of "machine washing," the mislabeling of services that aren’t really any form of AI.

There’s plenty of reality. Machine learning and early AI products are used not only in recommendation engines and programmatic ad buying, but also in customer segmentation, multivariate A/B testing, customizing websites down to an audience of one, and more.

Marketers and creatives—and their employers and agencies and clients—will reap tremendous gains from this explosion of machine marketing. The question is: What will our role really be?

As a CMO and a content creator, respectively, we first expected to be able to easily reassure agency creatives that there is nothing to worry about. But in our research, and in recent pilots with the technology, we’ve come to realize that AI might hurt a little, if we aren’t prepared for it. On the SAMR (substitution, augmentation, modification, redefinition) curve that disruptive technologies trace, AI is already entering the augmentation phase, which means that it’s affecting jobs.

Anything that affects jobs meets some amount of knee-jerk resistance. But resistance to change didn’t stop mobile, social, native, programmatic, or any of the other major advances that have encountered some initial skepticism and growing pains. Ignoring the advance of AI won’t save your job or your agency. This is a curve you want to be ahead of, not behind.

Good news: We have plenty of creative work to do, in order to reach our (human) customers. AI can assist, but you still have to take the lead.

Machine marketing meets creative

AI may indeed be intelligent, but it’s still artificial. Not human.

One of the key roles that we play as marketers is understanding people, and pulling the right levers to excite, incite, coax, or flatter them into taking action. Right now, AIs may be helpful in removing grunt work from our plates, but they don’t deal in emotions.

It’s our job as creatives to bring the human connection. To do that, we need to make sure that the technology we use:

- solves a real audience pain point, doing something valuable for the customer;

- creates on-brand experiences that the brand can own;

- and offers intuitive interactions that surprise and delight.

By bringing this focus to the work, creative professionals can help turn new AI tech from a gimmick into a business advantage. Here are three immediate areas where AI needs your help.

Bring the voice and tone

For copywriters and content creators, AI needs guidance on voice and tone. This is a bigger challenge than simply selecting a set of words and phrases. Chatbots are a great illustration of this larger task in action.

The best bots today are especially good at helping brands when customers want specific, standard information that may not be readily available or is tedious to look up themselves. However, the answers are still pretty scripted, and the humans behind the bots have to pay attention to how the bots sound.

A bot that sounds like any generic data collector doesn’t do anything for the brand. Bots can have their own personalities but should still be consistent with the brand.

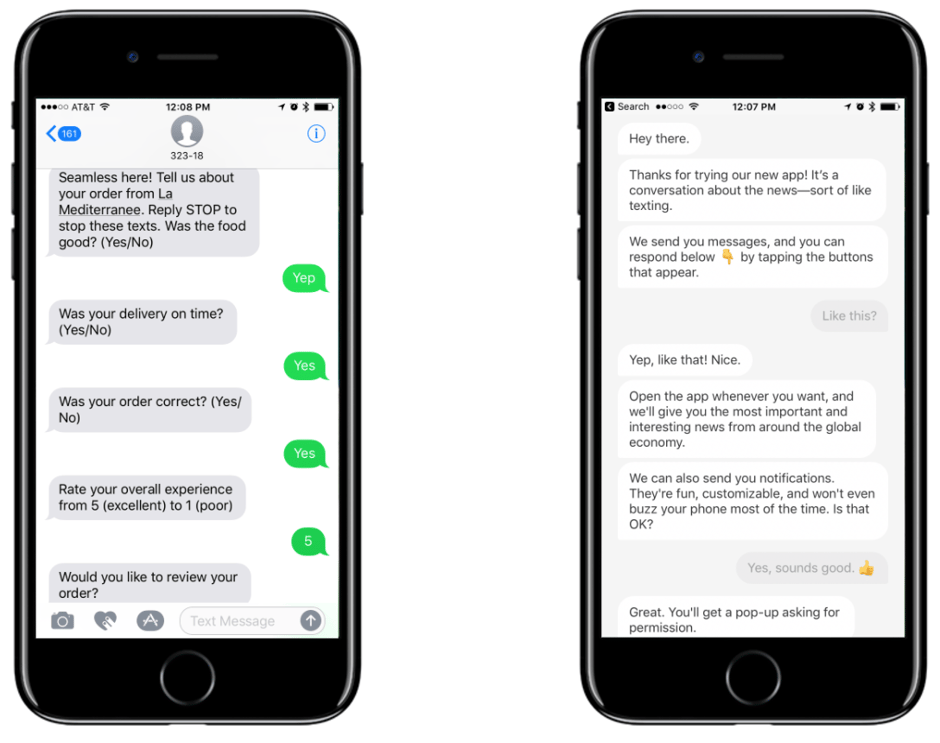

In this comparison of two recent bot conversations, you see the Seamless SMS bot on the left, and the Quartz news app on the right. The Seamless bot felt cold and intrusive, while the Quartz bot felt friendly and gave a new sense of the Quartz brand. Screenshots by Steven Wong/Ready State

The first time I realized the emotion a bot could deliver was reading Casey Newton’s fantastic story about how San Francisco entrepreneur Eugenia Kuyda built a ‘memorial bot’ for her dearly departed friend Roman Mazurenko. Kuyda collected a trove of text messages from their mutual friends, and taught a bot to speak like Roman. Reading through the chat logs really got to me. In one exchange a friend asks the Roman bot why he is feeling weird. Roman answers, “Because I don’t have this connection with you guys that I have in Moscow. I feel like I’m floating here, and everything becomes harder. But I need to stop whining and move on.” Then in response to the friend saying, “I miss you,” Roman simply says, “Me too.”

Obviously, strong emotions are not suitable for all brands, and a no-nonsense, all-business voice could be exactly what’s called for. The role of the creative is to figure that out, and to combine the power of the brand voice with the mechanics of natural language processing.

Natural-language AIs have gotten pretty good at understanding what is being asked, and matching the questions with specific scenarios, or intents. We need to anticipate what these intents are, and craft answers that help the customer, in the voice of the brand. Then we can review the chat logs for mistakes so that we can help train the AI to better understand or respond to what the customers are saying.
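To make the idea of intents concrete, here is a minimal sketch in Python. It is not how any particular bot platform works: the intent names, keywords, voices, and answers are all hypothetical, and simple keyword overlap stands in for the natural-language model a real product would use. What it does show is the division of labor: the machine matches the question to an intent, the creative writes the answer in the brand voice, and anything the bot cannot match is logged for a human to review.

```python
import re

# Hypothetical intents and trigger keywords. A real natural-language
# system would learn these from data rather than use a fixed list.
INTENTS = {
    "order_status": {"order", "delivery", "arrive", "track"},
    "opening_hours": {"open", "hours", "close"},
}

# The same intents answered in two hypothetical brand voices.
RESPONSES = {
    "friendly": {
        "order_status": "Good news, your order is on its way!",
        "opening_hours": "We're around from 9 to 5 and would love to see you.",
        "fallback": "Hmm, I didn't catch that. Let me find a human to help.",
    },
    "businesslike": {
        "order_status": "Your order has shipped.",
        "opening_hours": "Hours: 9 a.m. to 5 p.m., Monday to Friday.",
        "fallback": "Request not recognized. Transferring you to an agent.",
    },
}


def match_intent(message):
    """Return the intent sharing the most keywords with the message, or None."""
    words = set(re.findall(r"[a-z']+", message.lower()))
    best, best_score = None, 0
    for intent, keywords in INTENTS.items():
        score = len(words & keywords)
        if score > best_score:
            best, best_score = intent, score
    return best


def reply(message, voice, review_log):
    """Answer in the chosen brand voice; log unmatched messages for review."""
    intent = match_intent(message)
    if intent is None:
        review_log.append(message)  # a human reads these to improve the bot
        intent = "fallback"
    return RESPONSES[voice][intent]
```

Calling `reply("Where is my order?", "friendly", log)` and then the same question with `"businesslike"` returns two different answers to the same intent, which is exactly the choice a copywriter has to make. The `review_log` list is the stand-in for the chat-log review described above.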

At a higher level, we also need to clearly position the bot within the context of the brand. Where does it fit in the channel mix, and which customers will get the most value from a bot? Do you want to humanize the bot, or explicitly describe it as a tool? (Turns out, people may prefer bots with no names.) How does it defuse a tense or negative situation? How do you navigate the human-bot tango, figuring out when the bot pulls in a human or proactively steps into a conversation?

These voice and tone considerations apply to more than just bots. Creatives will need to similarly engage with conversational UIs that send emails, personalize site copy and, of course, speak.

Bring the look and feel

Look-and-feel is the other side of the voice-and-tone coin, and there is as much AI activity in visuals as in language. If you’re in repetitive production work, that type of job is realistically at risk, because computers are rapidly delivering automation to increasingly sophisticated production tasks.

But as with voice-and-tone, humans are required to make visual experiences emotionally connect with the customer.

Let’s look at things AI and machine learning can do on the visual side.

A very simple example: One of our clients, working for a sports-related company that makes money from sponsorships, built a machine-learning system that can watch a video feed and identify when corporate logos appear. This provides the sponsors with proof of how much airtime or exposure their sponsorship dollars have purchased. This simple but tedious task, recognizing images, is the kind of thing computers didn’t do very well until the last few years.
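Once a system can spot logos, turning its output into a sponsor report is simple arithmetic. The sketch below is our own illustration, not the client's code. It assumes a hypothetical recognition model has already produced, for each video frame, a list of the logos visible in it, and that the frame rate is known. The function name and sponsor names are invented for the example.

```python
from collections import Counter


def exposure_seconds(frame_detections, fps):
    """Convert per-frame logo detections into seconds of airtime per logo.

    frame_detections: one list of logo names per video frame, assumed to
    come from an image-recognition model that is not shown here.
    fps: frames per second of the video feed.
    """
    frames_seen = Counter()
    for logos in frame_detections:
        # A logo counts once per frame, even if it appears twice in the shot.
        frames_seen.update(set(logos))
    return {logo: count / fps for logo, count in frames_seen.items()}
```

With four frames of detections such as `[["Acme"], ["Acme", "Globex"], [], ["Acme"]]` at 2 frames per second, the function credits Acme with 1.5 seconds and Globex with 0.5 seconds.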

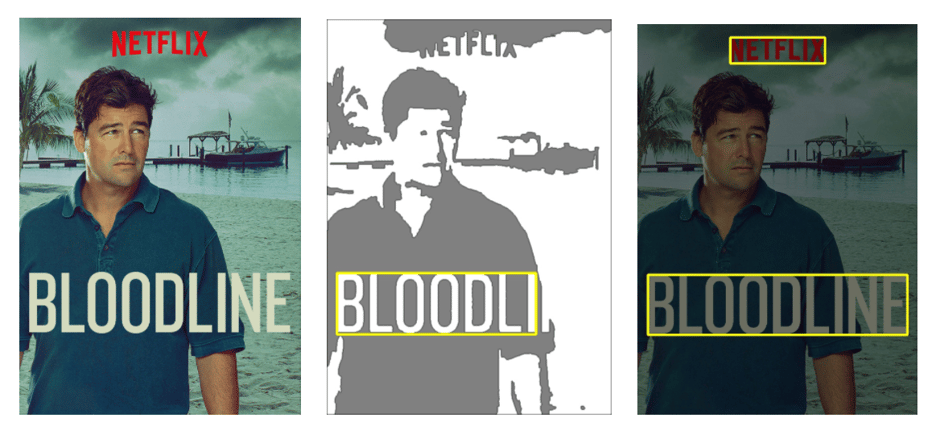

This second example illustrates a more sophisticated augmentation of past practices. Netflix has a huge library of movies and shows. A significant chunk of its customers’ video consumption is driven through Netflix’s “you might also like”-style recommendations, and each of the recommendations is accompanied by a visual from the video.

You won’t be surprised that the company uses machine-learning analysis of user behavior to choose which recommendations to show each customer. What might surprise you is that it uses a different machine-learning technology to select, label, and crop the promotional images. The system has to recognize faces and other key visual elements, recognize text appearing in an image, and so on. Creating these promotional blocks for tens of thousands of movies and episodes would occupy a team of human designers. But AI is doing that work instead.

Netflix uses AI’s visual-recognition powers to automatically grab and edit effective promotional images for its content recommendation engine. The company says these images drive online-video consumption. Credit: Netflix

Other examples show AI at work still further up the design stack.

Smashing Magazine recently ran a mind-expanding piece on algorithm-driven design, demonstrating what’s already possible when human designers augment their work with machine intelligence. The article features enough tools and ideas to keep a creative technologist busy for the rest of 2017. The systems’ roles include discovering rules, creating variations, applying stylized filters, matching colors, and modeling full user experiences.

In building visually complex marketing tools like ads, landing pages, and websites, humans generally still need to set boundaries, making those choices that define the brand. With the definition in place, machine learning and AI are useful for optimizing the way users experience the brand.

We’ve done some preliminary work with a Silicon Valley AI company that provides automated testing of variations and placement on a Web page to maximize performance. To run that kind of experiment at scale, you’d ideally have a lot of traffic, as well as a lot of assets to test. AI can also lend a hand in creating those assets when they are variations on an established theme; tools such as Prisma and Pikazo show how AI can learn and apply a “style” to a new image, once again relieving the designer from having to busy-work dozens of versions of the same graphic.
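For readers curious about what automated testing of variations can look like under the hood, here is a minimal sketch. We don't know which method the company above uses, so this substitutes a well-known textbook approach called epsilon-greedy: most of the time the system shows the variation with the best conversion rate so far, and a small fraction of the time it shows a random one in case the early numbers were misleading. The class and variation names are hypothetical.

```python
import random


class VariationTester:
    """Epsilon-greedy chooser for page variations (an illustrative sketch)."""

    def __init__(self, variations, epsilon=0.1, seed=None):
        self.epsilon = epsilon          # share of traffic used to explore
        self.rng = random.Random(seed)  # seedable for repeatable runs
        self.shows = {v: 0 for v in variations}
        self.conversions = {v: 0 for v in variations}

    def rate(self, variation):
        """Observed conversion rate; 0.0 for a variation never shown."""
        shows = self.shows[variation]
        return self.conversions[variation] / shows if shows else 0.0

    def choose(self):
        """Pick the variation to show to the next visitor."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.shows))  # explore
        return max(self.shows, key=self.rate)         # exploit the leader

    def record(self, variation, converted):
        """Log one impression and whether the visitor converted."""
        self.shows[variation] += 1
        if converted:
            self.conversions[variation] += 1
```

In use, a page would call `choose()` for each visitor and `record()` once the outcome is known. The sketch also shows why traffic matters: with only a handful of impressions per variation, the observed rates are too noisy for the leader to mean much.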

Where we don’t yet see machines showing a lot of capability is in delivering “surprise and delight.” AI is great at finding hidden rules, but not as good at finding where you can *break* the rules. Look at the well-known example of Ling’s Cars. It’s been written up many times so we won’t rehash it here, but this very successful, shocking visual approach is intended to evoke an emotional (human) reaction.

At present, an AI designer tool like The Grid is unlikely to crank out a Ling’s Cars design (unless we feed the AI a lot of data so it can figure out why Ling's Cars works). That leaves another window open where humans can deliver things machine marketing can’t.

Creatives take on a new role as teachers

One thing is for sure: Very soon, we will have the opportunity to do a lot of creative work in the AI marketing space—from defining the voice for conversational UIs, to teaching more sophisticated natural-language systems new skills, to figuring out which visuals work best in different situations, to creating experiences designed for your best customers.

As an agency, we’re looking at how we’re structured to make sure we’re able to test and learn from these new technologies. It might mean big change, or it might not, but ultimately, it ensures that we’re able to do what’s best for our creatives, our agency, and our clients.